The ERS Data System’s Inter-rater Reliability module lets you assess a room, compare your scores with other raters’ scores, and determine consensus scores. The software automatically calculates each assessor’s overall reliability and does not require an internet connection.

It also enables you to generate classroom reports based on consensus scores, without losing your original scores. Assessors who do not have the ERS Data System may still participate in the reliability process, provided that one of the raters has the software.

Important Concepts:

Lead Assessor:

The assessor responsible for writing the classroom’s Summary Report, which gives the facility feedback on the assessment. This assessor may also be primarily responsible for assessments at this facility or in this facility’s region.

Co-Raters:

The assessors who are rating the classroom along with the Lead Assessor.

Guest Assessor:

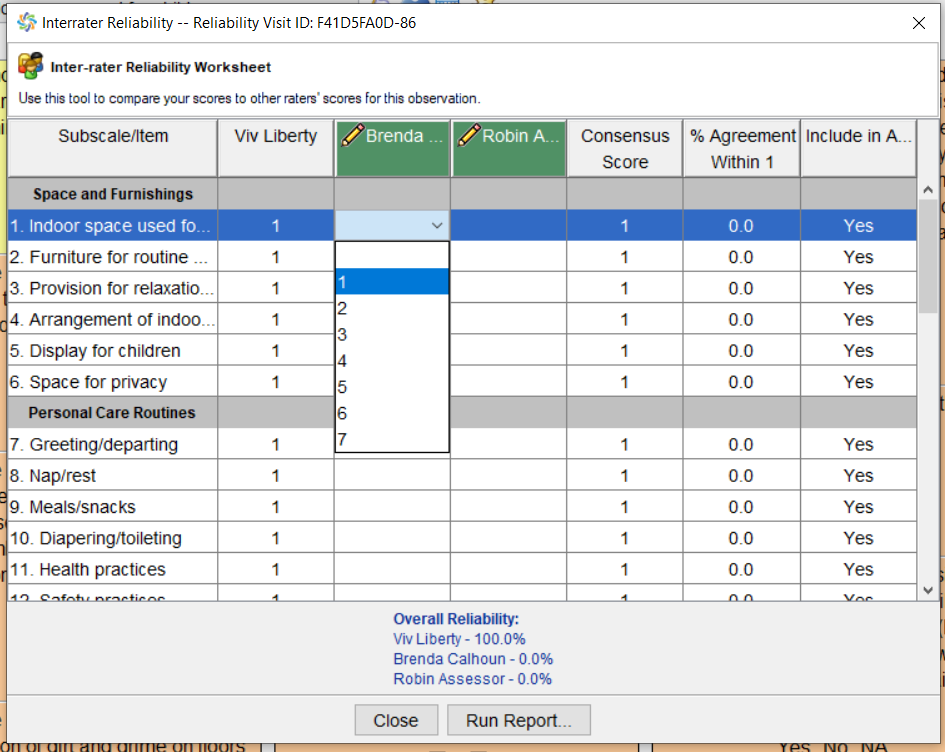

A co-rater who is not in the Lead Assessor’s Assessor Lookup and should not be added to it. This assessor may be performing the assessment on paper, may be a member of a different organization, and/or may not have the ERS Data System.

Consensus Observation:

Automatically created by the software from the Lead Assessor’s completed observation, the consensus observation reflects all of the consensus Item scores and Indicator answers. Any feedback reports for the facility should be generated from this observation.

Reliability Observation:

The Lead Assessor’s and each Co-Rater’s personal version of the observation. All participants in the reliability process must upload their reliability observations. Once the reliability process has begun, no changes can be made to the reliability observations.

The Process:

- Assessors perform observations as usual, and ensure they’re complete.

- Assessors get together after the assessment to do the inter-rater reliability check.

- They determine who the lead assessor is.

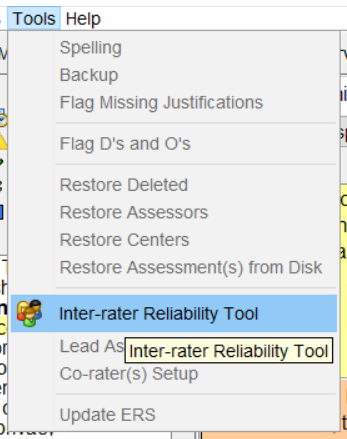

- The lead assessor opens the Inter-Rater Reliability Tool from the top menu:

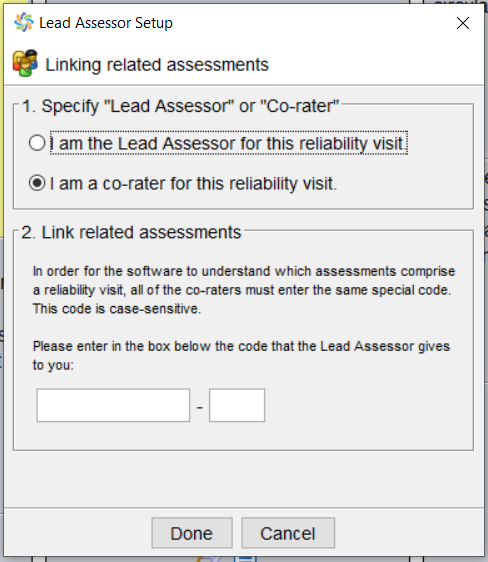

5. The lead assessor selects “I am the lead assessor for this reliability visit” and receives the reliability ID code.

6. The Co-Raters select “I am a co-rater for this reliability visit” and enter the code given to them by the lead assessor.

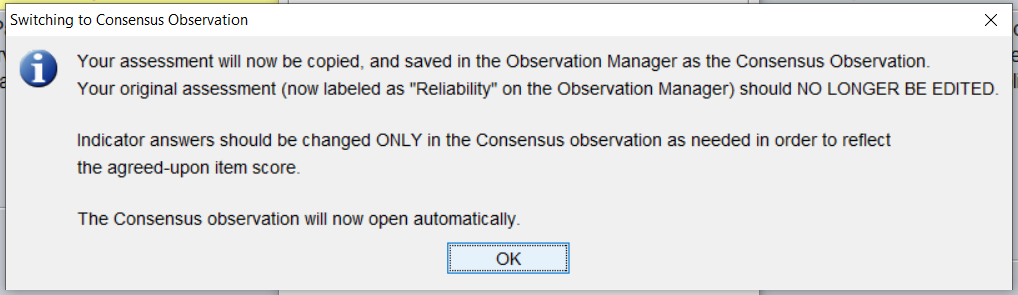

The following message will display on the lead assessor’s screen. Click “ok” to continue to the consensus observation.

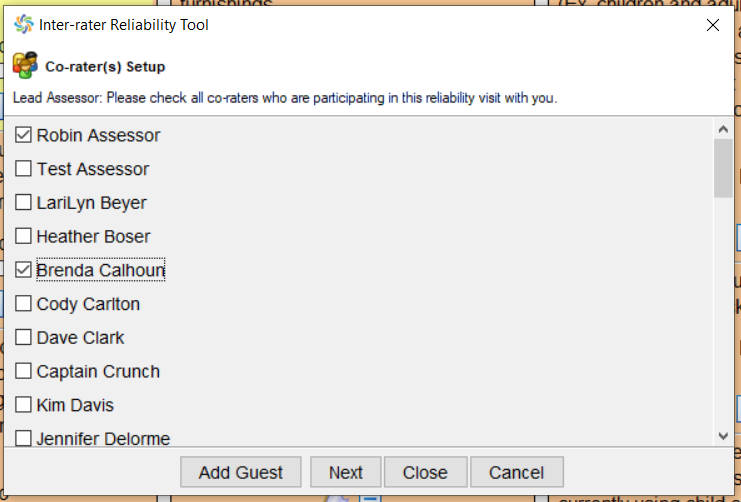

7. The lead assessor adds the co-raters to the consensus observation.

8. Assessors discuss, item by item, what the indicator answers and item scores should have been. The lead assessor records the co-raters’ scores and any changes to the consensus observation scores in the Inter-Rater Reliability tool window.

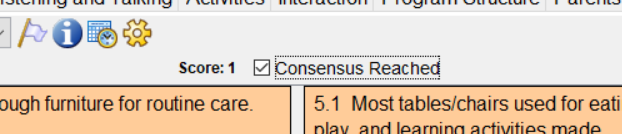

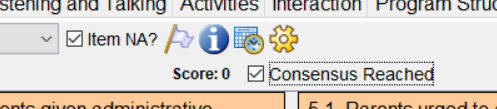

9. When consensus has been reached on an item, the lead assessor must check that item’s “consensus reached” box. This must be done for every item in the observation. *Items marked “N/A” must also be checked for the observation to achieve “Complete” status.*

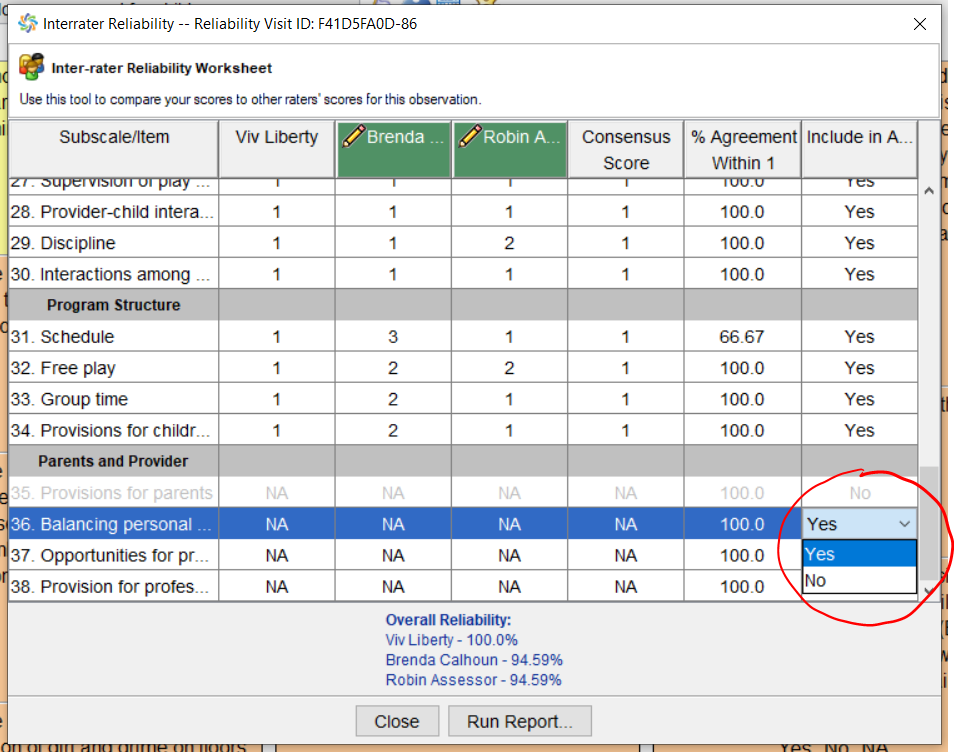

10. When consensus has been reached for all items, the lead assessor generates the Inter-rater Reliability Report and distributes it to the participants. This report shows the overall reliability of each assessor.

The lead assessor can select which items to include in the report from within the Inter-Rater Reliability tool.

11. Assessors upload their observations as usual. Co-raters should remember to add the reliability ID code to their observation before uploading.

12. Supervisors may review all observations and give feedback on the consensus observation as necessary, so that an acceptable Summary Report can be given to the facility.